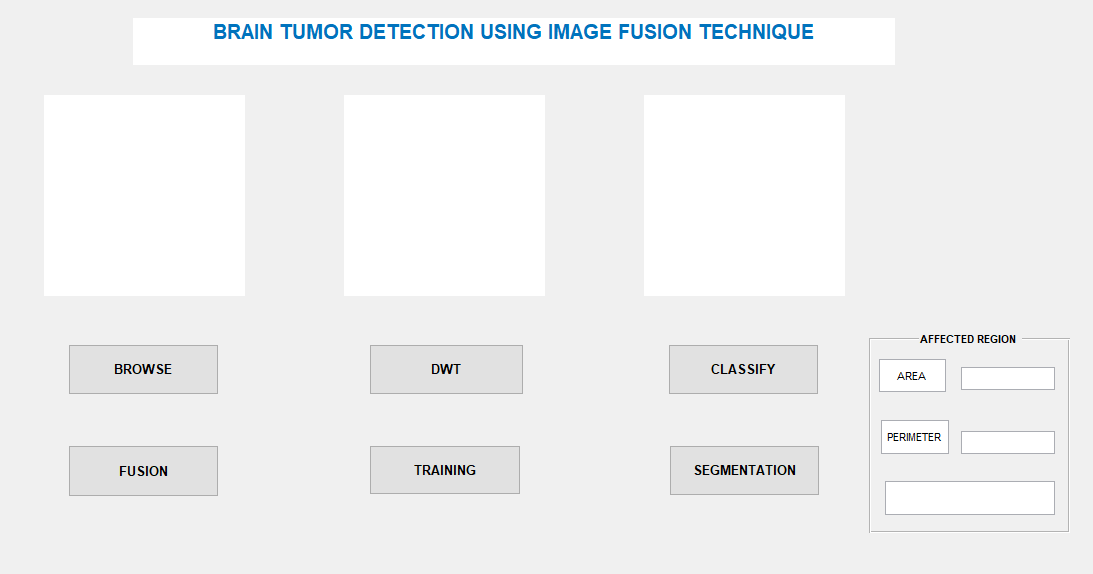

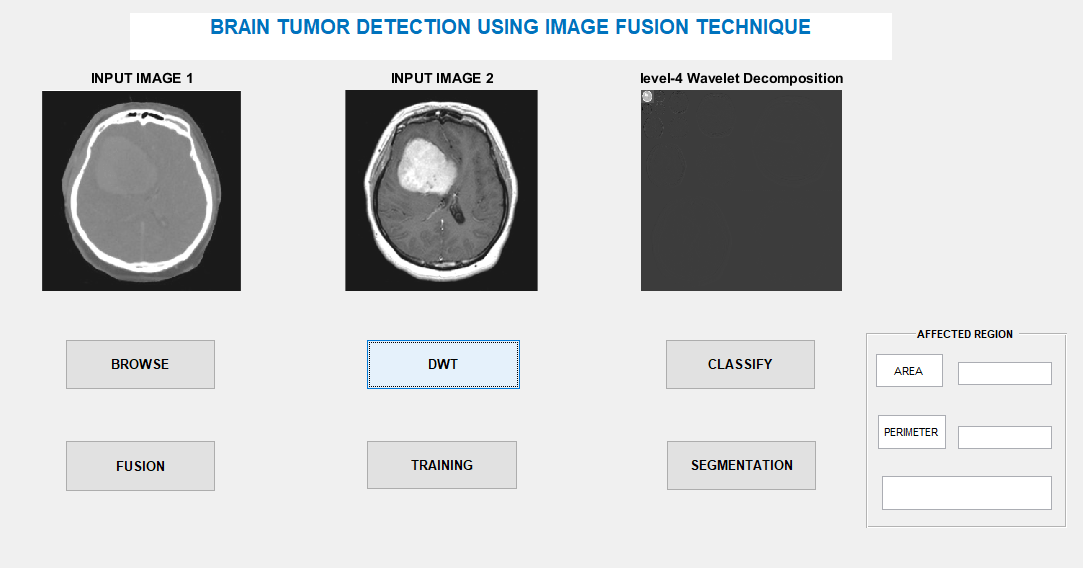

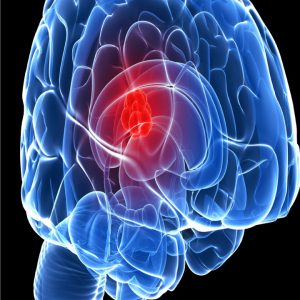

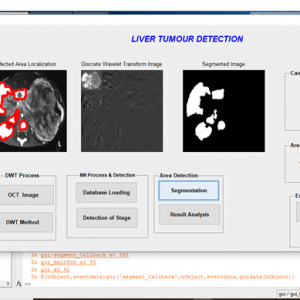

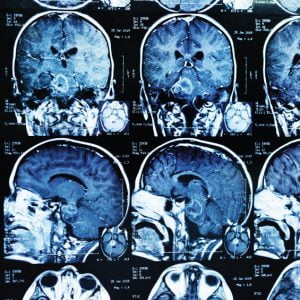

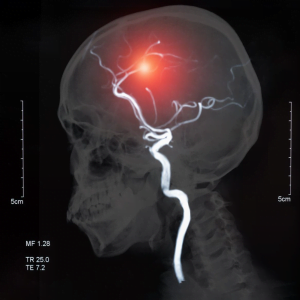

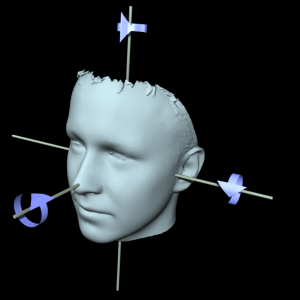

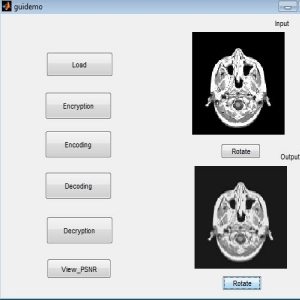

Brain Tumour Detection With Image Fusion

ABSTRACT

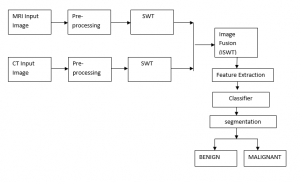

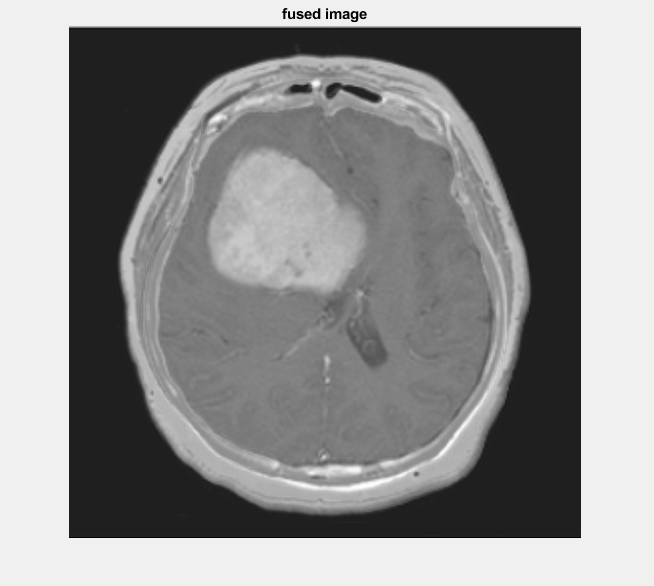

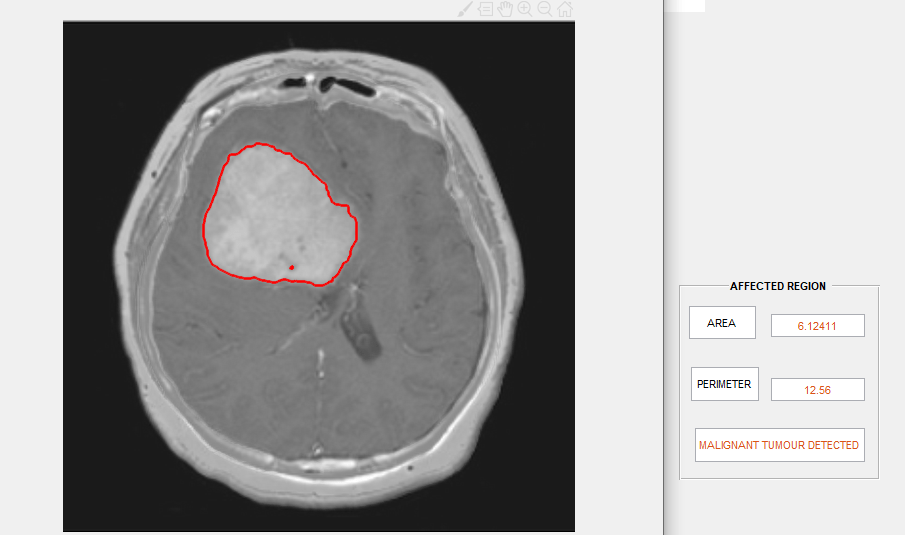

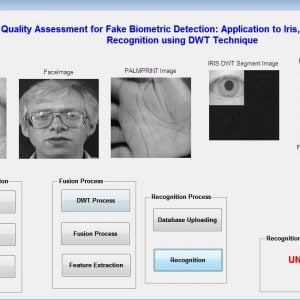

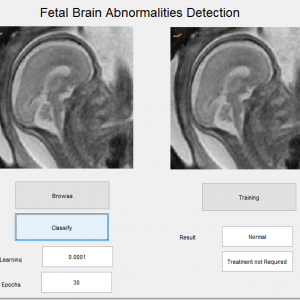

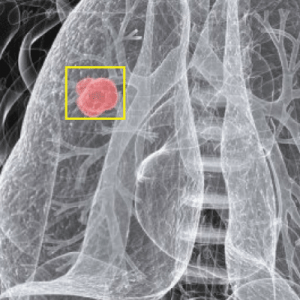

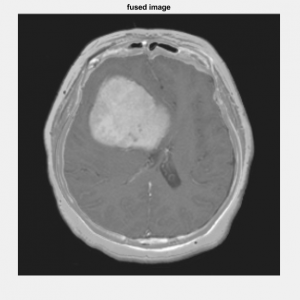

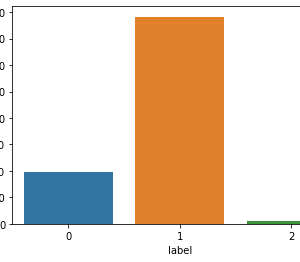

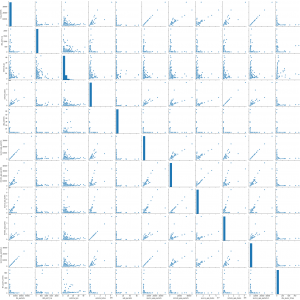

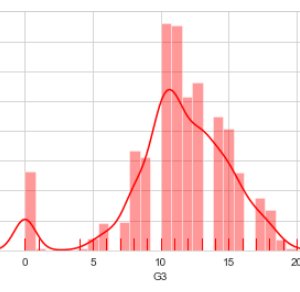

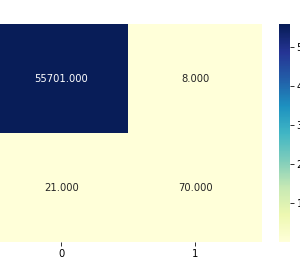

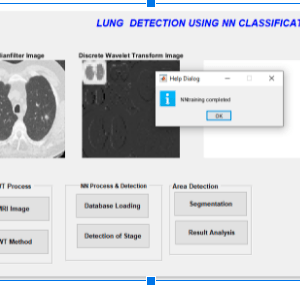

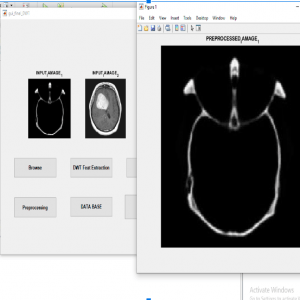

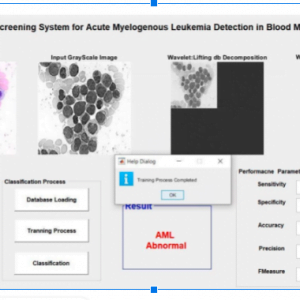

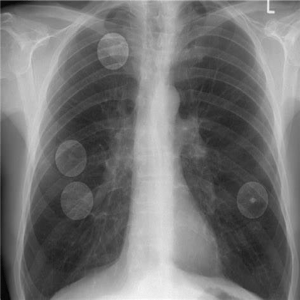

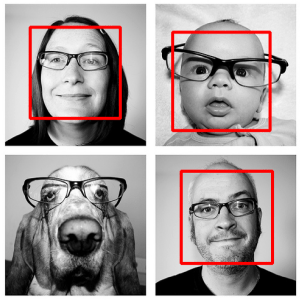

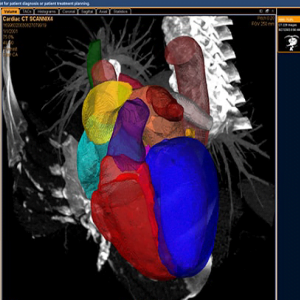

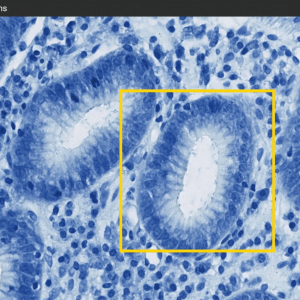

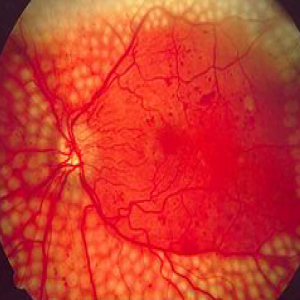

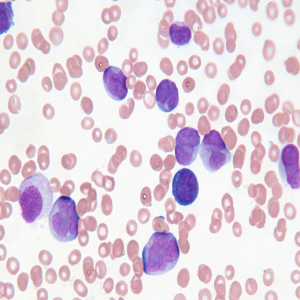

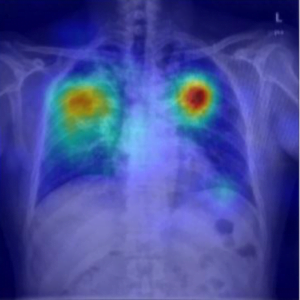

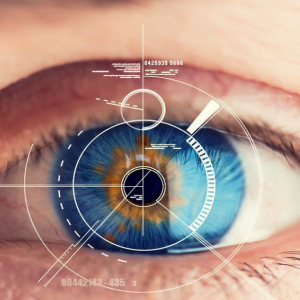

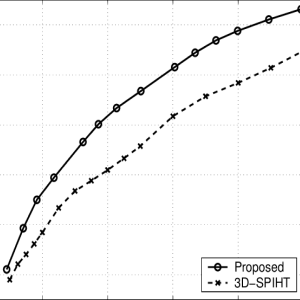

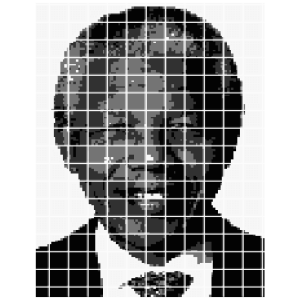

Brain tumor detection is a challenging task in medical image analysis. Manual detection by domain specialists is time-consuming. Numerous methods have been proposed for brain tumor detection and discrimination, but a fast and efficient technique is still needed. In this article, a technique is presented to distinguish between benign and malignant tumors. Our method integrates image fusion, feature extraction, and classification. MRI and CT images are serially fused using the stationary wavelet transform (SWT), and features are extracted from the fused result. The fused feature vector is supplied to multiple classifiers to compare their prediction rates. The databases are used for tumor detection. The results show that the proposed model effectively classifies abnormal and normal brain regions.

EXISTING METHOD

- principal component analysis (PCA)

- discrete wavelet transform (DWT)

- dual-tree complex discrete wavelet transform (DTDWT)

- nonsubsampled contourlet transform (NSCT)

- Clustering for segmentation

DRAWBACKS

- Loss of contrast information due to the averaging method

- The maximum-selection approach is sensitive to sensor noise

- High spatial distortion

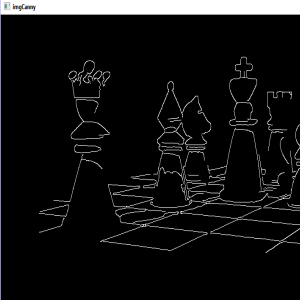

- Limited performance in representing edges and textures
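The first two drawbacks can be illustrated with a toy example. This sketch assumes two 1-D sources and a simple peak-minus-background contrast measure; both are illustrative choices, not taken from this project.

```python
import numpy as np

# Source A sees a bright lesion (100) on a dark background (0);
# source B sees only the background.
a = np.array([0.0, 0.0, 100.0, 100.0, 0.0, 0.0])
b = np.zeros_like(a)

def contrast(x):
    """Peak-to-background difference (an assumed, simple contrast measure)."""
    return x.max() - x.min()

fused_avg = (a + b) / 2      # averaging rule: lesion contrast is halved
fused_max = np.maximum(a, b) # maximum-selection rule: lesion contrast kept

print(contrast(a), contrast(fused_avg), contrast(fused_max))  # 100.0 50.0 100.0

# The maximum rule also passes through any spike present in either source.
noisy_b = b.copy()
noisy_b[0] = 80.0            # a hypothetical sensor-noise spike in B
print(np.maximum(a, noisy_b))  # index 0 now holds the spike value 80.0
```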

PROPOSED METHOD

- Stationary wavelet transform (SWT)

- Inverse SWT (ISWT)

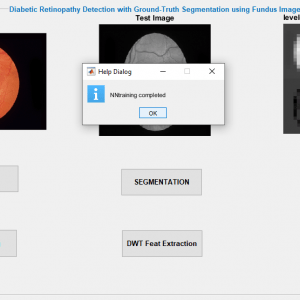

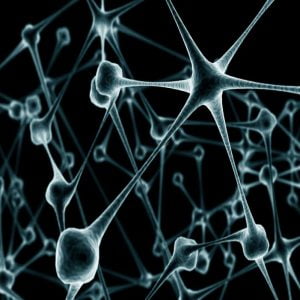

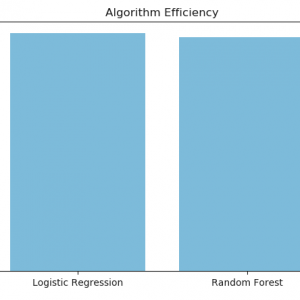

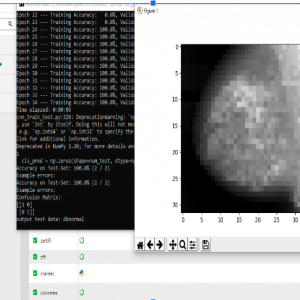

- Neural Network Classifier

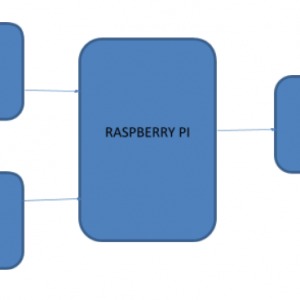

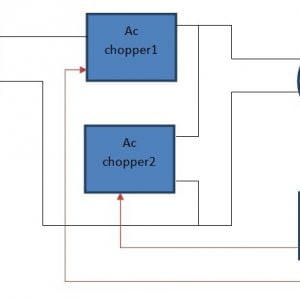

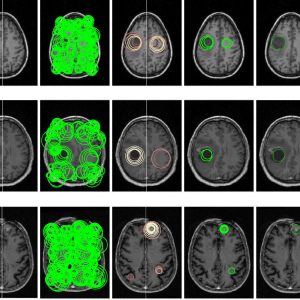

BLOCK DIAGRAM


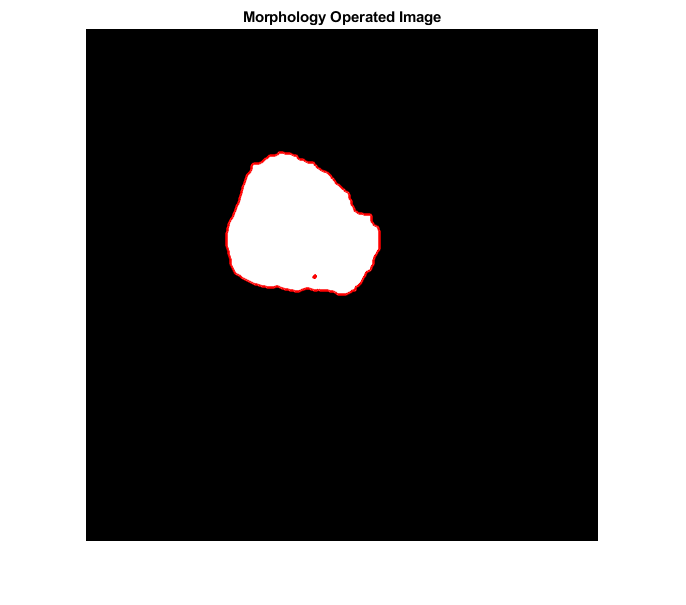

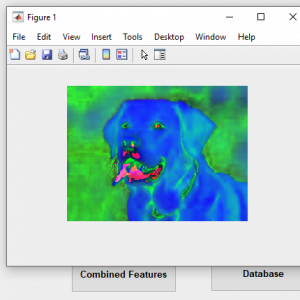

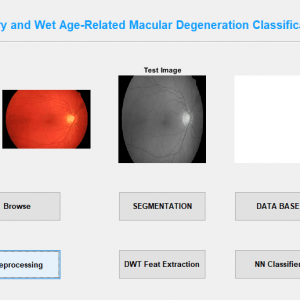

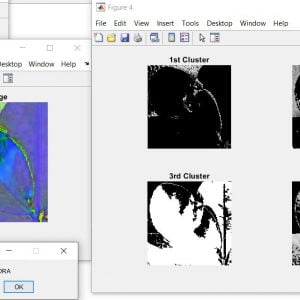

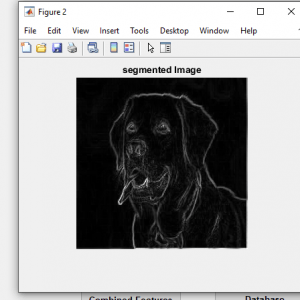

METHODOLOGIES

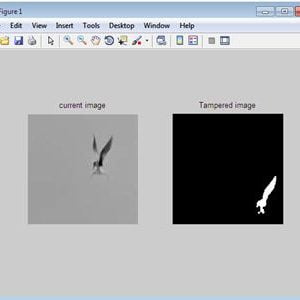

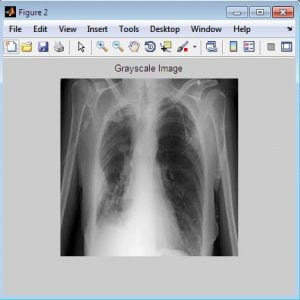

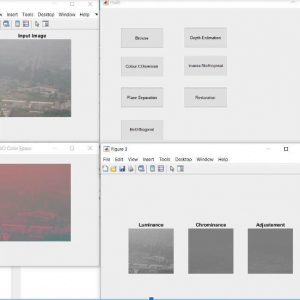

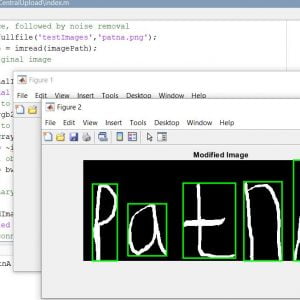

- Pre-Processing

- SWT Fusion

- Feature Extraction

- Neural Network Classifier

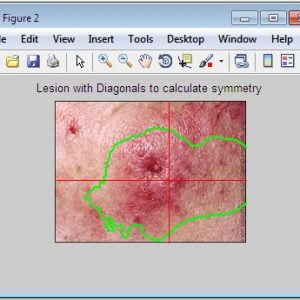

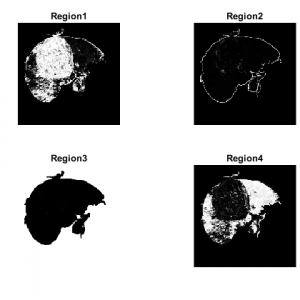

- Segmentation

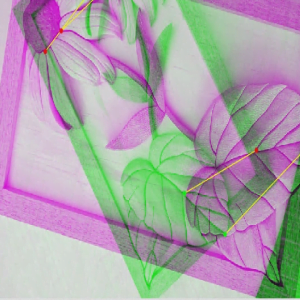

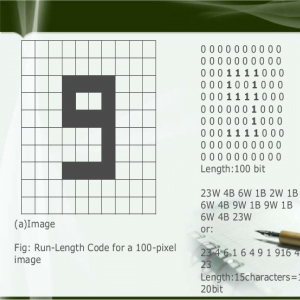

FUSION METHOD

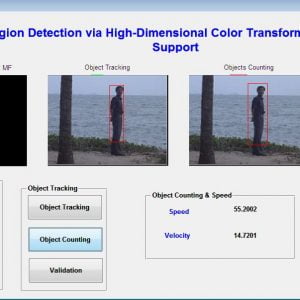

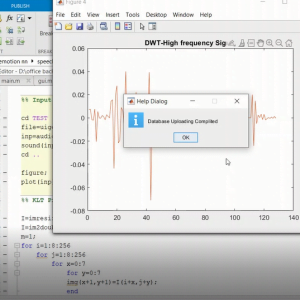

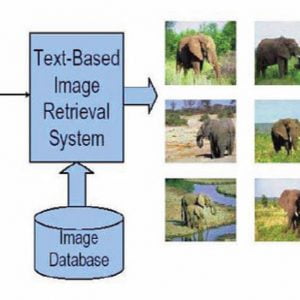

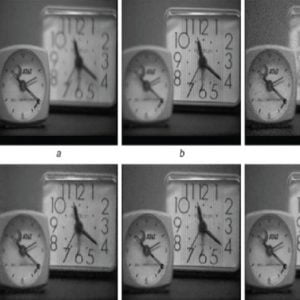

- The proposed method consists of several parts. First, SWT decomposes each source image into a set of sub-images that contain its important features. Then, a fusion rule based on consistency verification fuses the corresponding coefficients of the sub-images, and finally the inverse SWT (ISWT) reconstructs the fused image. The parts of the fusion scheme are detailed below.
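The coefficient-fusion step can be sketched as a choose-max rule followed by consistency verification. This is a minimal sketch, assuming NumPy, a 3x3 majority filter as the consistency check, and plain averaging for the approximation sub-band; the project's exact rule is not given, and `majority_filter`, `fuse_detail`, and `fuse_approx` are hypothetical names.

```python
import numpy as np

def majority_filter(decision: np.ndarray, size: int = 3) -> np.ndarray:
    """Consistency verification (assumed form): replace each binary decision
    with the majority vote of its size x size neighbourhood.
    Borders are handled by reflection padding."""
    pad = size // 2
    padded = np.pad(decision.astype(int), pad, mode="reflect")
    votes = np.zeros(decision.shape, dtype=int)
    h, w = decision.shape
    for dy in range(size):
        for dx in range(size):
            votes += padded[dy:dy + h, dx:dx + w]
    return votes > (size * size) // 2

def fuse_detail(da: np.ndarray, db: np.ndarray) -> np.ndarray:
    """Fuse two detail sub-images: pick the larger |coefficient|,
    then smooth the decision map so isolated choices are overruled."""
    decision = np.abs(da) >= np.abs(db)  # True -> take the coefficient from A
    decision = majority_filter(decision)
    return np.where(decision, da, db)

def fuse_approx(aa: np.ndarray, ab: np.ndarray) -> np.ndarray:
    """Approximation sub-images: simple average (an assumption)."""
    return 0.5 * (aa + ab)

da = np.full((5, 5), 2.0)
db = np.full((5, 5), 1.0)
db[2, 2] = 3.0                     # an isolated coefficient where B "wins"
print(fuse_detail(da, db)[2, 2])   # 2.0: the isolated choice is overruled
```

In the full scheme, these rules would be applied to every sub-image pair at every level before running the ISWT.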

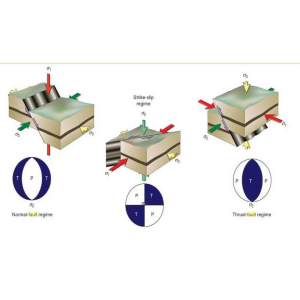

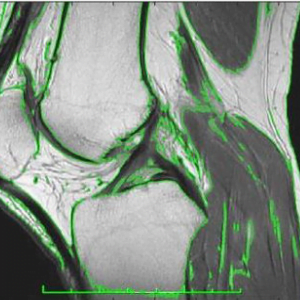

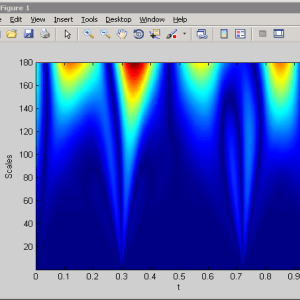

Stationary Wavelet Transform

- Compared with the traditional WT, SWT is shift-invariant and redundant. Instead of downsampling the sub-images, it upsamples the filters at each level, so the sub-images in the transform domain keep the size of the source image. Through its multi-scale decomposition, SWT captures small features at fine scales and large features at coarse scales.

- Therefore, the decomposed sub-images retain most of the information in the source images. Because the filters are upsampled by inserting zeros ("holes") between their taps, SWT is also known as the à trous algorithm.
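The undecimated, dilated-filter idea can be sketched with a Haar filter pair in NumPy. This is a simplified variant, assuming circular boundaries and filters scaled by 1/2 so that the four sub-bands of each level sum back to their input; library implementations such as PyWavelets' `swt2`/`iswt2` use orthonormal filters and a different inverse. The function names are hypothetical.

```python
import numpy as np

def _split(x, axis, step):
    """Undecimated Haar split along one axis. The filter taps are `step`
    samples apart, i.e. step - 1 zeros ("holes") are inserted between them.
    Circular boundary handling is assumed."""
    shifted = np.roll(x, -step, axis=axis)
    low = 0.5 * (x + shifted)
    high = 0.5 * (x - shifted)
    return low, high  # low + high == x

def swt2_haar(img, levels):
    """Return (approximation, [(LH, HL, HH) per level]).
    No downsampling: every sub-image has the same shape as `img`."""
    approx = np.asarray(img, dtype=float)
    details = []
    for j in range(levels):
        step = 2 ** j  # filter dilation doubles at each level
        lo0, hi0 = _split(approx, axis=0, step=step)
        ll, lh = _split(lo0, axis=1, step=step)
        hl, hh = _split(hi0, axis=1, step=step)
        details.append((lh, hl, hh))
        approx = ll
    return approx, details

def iswt2_haar(approx, details):
    """Inverse of swt2_haar: each level's four sub-bands sum back to
    the approximation of the previous level."""
    out = approx.copy()
    for lh, hl, hh in reversed(details):
        out = out + lh + hl + hh
    return out

img = np.arange(64, dtype=float).reshape(8, 8)
approx, details = swt2_haar(img, levels=2)
print(approx.shape, details[0][0].shape)        # (8, 8) (8, 8)
print(np.allclose(iswt2_haar(approx, details), img))  # True
```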


- The WT is one of the most widely used multi-scale transforms in image processing, but it is not shift-invariant, and it is a nonredundant decomposition.

- SWT is therefore selected in this work because its redundancy at each scale preserves more information from the source images. Using the multiresolution analysis of SWT, the important features of the source images are decomposed into different levels.

- At each decomposition level, SWT yields approximation coefficients together with horizontal, vertical, and diagonal detail coefficients.
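Shift invariance can be checked on a 1-D signal. The sketch below, assuming Haar filters and circular boundaries, compares one level of undecimated (SWT-style) detail coefficients with those of a decimated Haar DWT; `haar_swt1` and `haar_dwt1` are hypothetical helper names.

```python
import numpy as np

def haar_swt1(x, step=1):
    """One level of undecimated Haar detail: keeps len(x) samples."""
    return 0.5 * (x - np.roll(x, -step))

def haar_dwt1(x):
    """One level of decimated Haar detail: keeps len(x) // 2 samples."""
    return 0.5 * (x[0::2] - x[1::2])

x = np.array([0., 0., 0., 4., 4., 4., 0., 0.])
xs = np.roll(x, 1)  # the same signal shifted by one sample

# Undecimated: the detail of the shifted signal is the shifted detail.
print(np.allclose(haar_swt1(xs), np.roll(haar_swt1(x), 1)))  # True

# Decimated: [0, -2, 0, 0] vs [0, 0, 0, 2], not a shifted copy.
print(haar_dwt1(x), haar_dwt1(xs))
```

The decimated detail changes pattern under a one-sample shift, which is the lack of shift invariance described above.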

ADVANTAGES

- It reduces the storage cost

- It helps to diagnose diseases

- NSCT provides better edge and texture representation than other transforms

APPLICATIONS

- Medical Applications

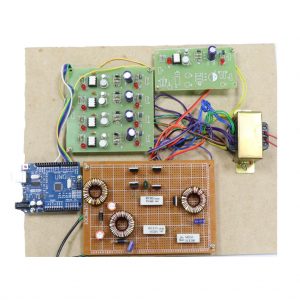

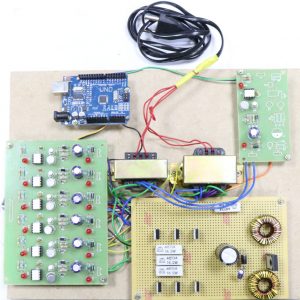

SOFTWARE REQUIREMENT

- MATLAB R2014a or later


REFERENCES:

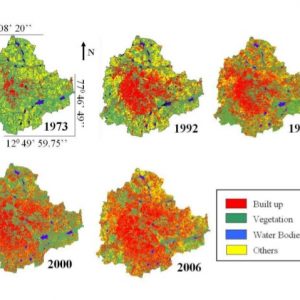

- Y. Gu, Y. Zhang, and J. Zhang,?Integration of spatial? spectral information for resolution enhancement in hyperspectral images,? IEEE Trans. Geosci. Remote Sens., vol. 46, no. 5, pp. 1347?1358, May 2008.

- Y. Zhao, J. Yang, Q. Zhang, L. Song, Y. Cheng, and Q. Pan,?Hyperspectral imagery super-resolution by sparse representation and spectral regularization,? EURASIP J. Adv. Signal Process., vol. 2011, no. 1, pp. 1?10, Oct. 2011.

- T. Akgun, Y. Altunbasak, and R. M. Mersereau, ?Super-resolution reconstruction of hyperspectral images,?IEEE Trans. Image Process., vol. 14, no. 11, pp. 1860?1875, Nov. 2005.

- L. Loncanet al.,?Hyperspectral pansharpening: A review,?IEEE Geosci. Remote Sens. Mag., vol. 3, no. 3, pp. 27?46, Sep. 2015.

- ?P. Chavez, C. Sides, and A. Anderson,?Comparison of three different methods to merge multiresolution and multispectral data- LANDSAT TM and SPOT panchromatic,?Photogramm. Eng. Remote Sens., vol. 57, no. 3, pp. 295? 303, Mar. 1991
