
Lip Movement Detection using Raspberry Pi

ABSTRACT

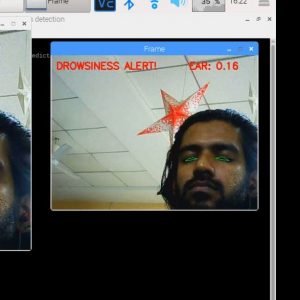

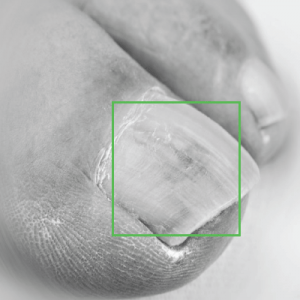

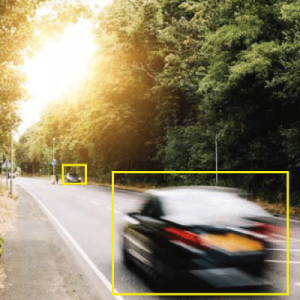

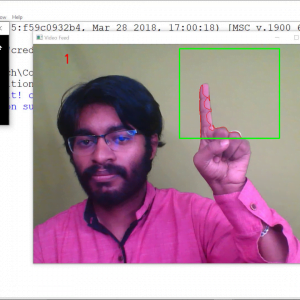

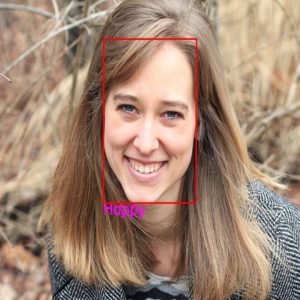

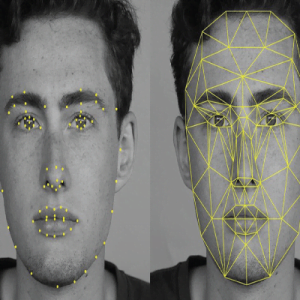

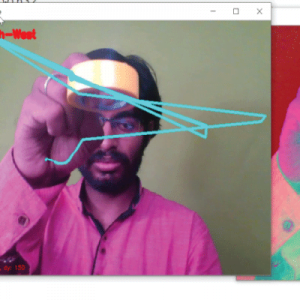

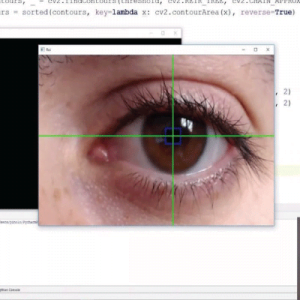

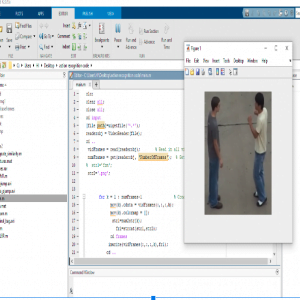

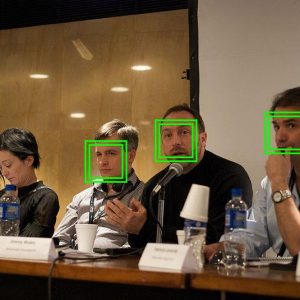

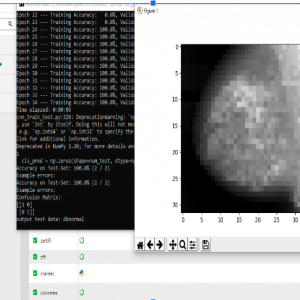

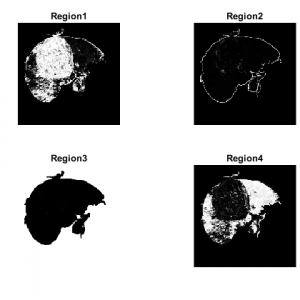

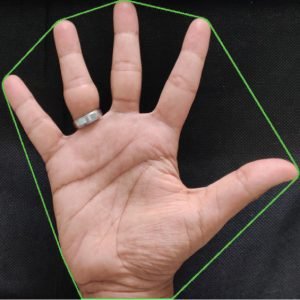

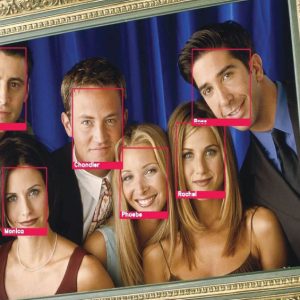

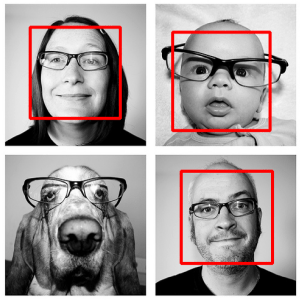

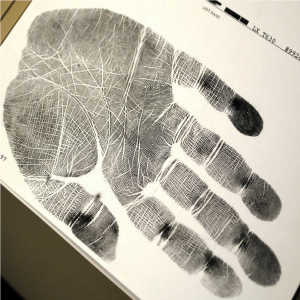

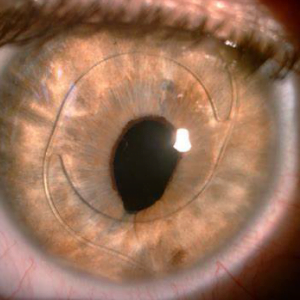

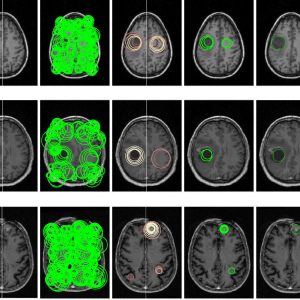

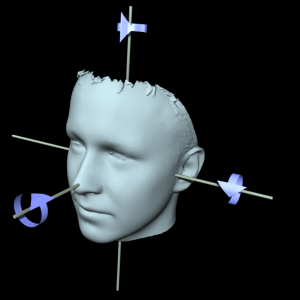

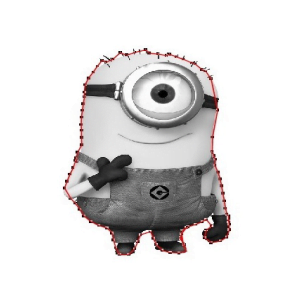

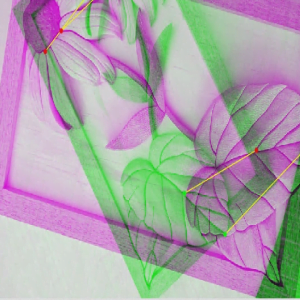

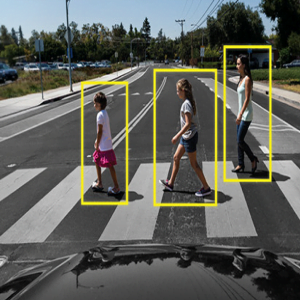

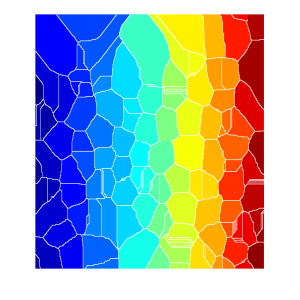

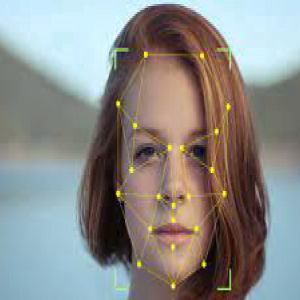

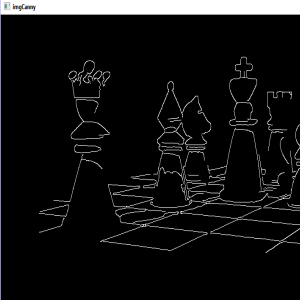

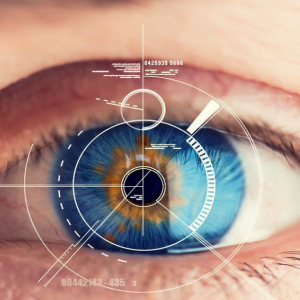

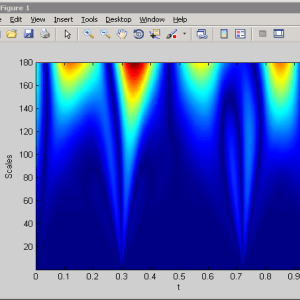

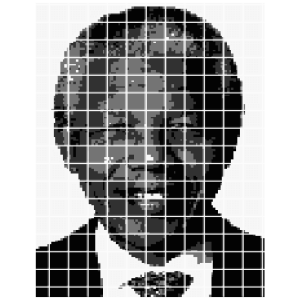

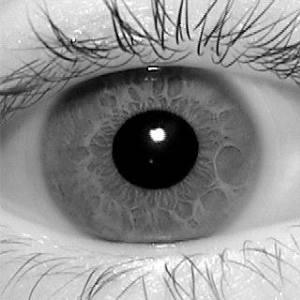

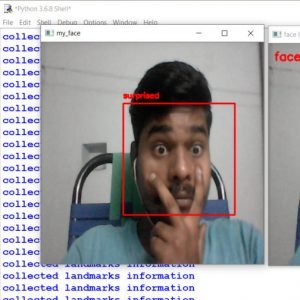

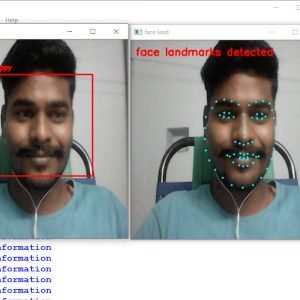

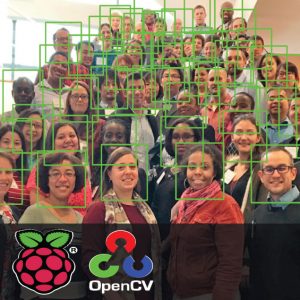

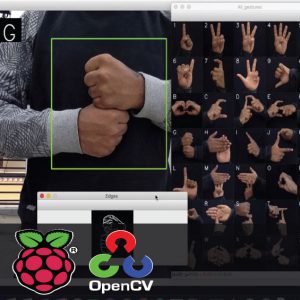

Most automatic speech recognition systems have concentrated exclusively on the acoustic speech signal and are therefore susceptible to acoustic noise. The benefits of visual speech cues have motivated significant interest in automatic lip-reading, which aims to improve automatic speech recognition by exploiting informative visual features of the speaker's mouth region; among these, the speaker's lip motion stands out as the most linguistically informative visual feature. In this paper, we present a new, robust lip location and tracking approach that aims to improve lip-reading accuracy. Lip regions of interest are detected by a new method combined with Intel's open-source computer vision library, OpenCV. In this method, we analyze the spatial relationship between the face, eyes, and mouth, from which the mouth region can be easily located; this proves to be an effective basis for lip tracking. In the subsequent step, the image is converted from RGB to Lab color space, and the a component of Lab is used to segment the lips and track the lip region more accurately and efficiently in video sequences of a speaker's talking face under different lighting conditions, lip shapes, and head poses. Extensive experiments show that the proposed method achieves superior performance to other similar lip tracking approaches and can be effectively integrated into lip-reading or visual speech recognition systems.
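The Lab step described above can be sketched in plain Python. This is a minimal illustration, not the project's implementation: it converts an 8-bit sRGB pixel to CIE Lab under a D65 white point, keeps only the a component (negative toward green, positive toward red/magenta), and marks as lip candidates the pixels whose a value exceeds a threshold. The function names `lab_a` and `lip_mask` and the default threshold (the mean a value of the region) are hypothetical choices for this sketch.

```python
def _srgb_to_linear(c):
    """Undo the sRGB gamma curve for a channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _lab_f(t):
    """CIE Lab non-linearity applied to a normalised X, Y or Z value."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def lab_a(rgb):
    """Return the CIE Lab a component (D65) of an 8-bit (R, G, B) pixel."""
    r, g, b = (_srgb_to_linear(v / 255) for v in rgb)
    # Linear sRGB -> CIE XYZ; a depends only on X and Y, so Z is skipped.
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    xn, yn = 0.95047, 1.0  # D65 reference white
    return 500 * (_lab_f(x / xn) - _lab_f(y / yn))


def lip_mask(pixels, threshold=None):
    """Mark pixels of an RGB region whose a value exceeds threshold.

    pixels is a list of rows of (R, G, B) tuples. If threshold is None,
    the mean a value of the region is used (a hypothetical default).
    """
    a_values = [[lab_a(p) for p in row] for row in pixels]
    if threshold is None:
        flat = [a for row in a_values for a in row]
        threshold = sum(flat) / len(flat)
    return [[a > threshold for a in row] for row in a_values]
```

On real frames, OpenCV's `cv2.cvtColor(frame, cv2.COLOR_BGR2LAB)` performs the conversion for the whole image; for 8-bit images OpenCV stores a offset by 128, so a threshold chosen on true a values must be shifted accordingly.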

INTRODUCTION:

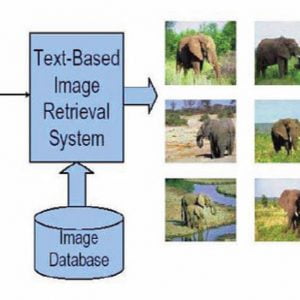

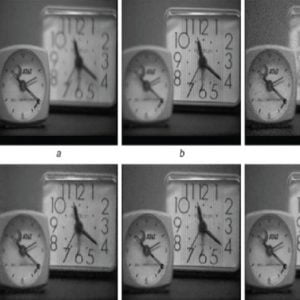

It is well known that visual speech information obtained through lip-reading is very useful for human speech recognition [1]. Hearing-impaired people use lip-reading as a primary source of information for speech communication. Even for people with normal hearing, seeing the speaker's lip motion has been shown to significantly improve intelligibility, especially under adverse acoustic conditions.

A complete lip-reading system can consist of lip location, lip tracking, lip movement extraction, and lip-reading. Lip location in a face image is the primary and most critical stage, because its accuracy affects the performance of the subsequent lip tracking, lip movement extraction, and lip-reading stages. In earlier systems, the camera captured only the lip region, or the lip region was marked manually; neither approach is practical or automatic, and both limit lip-reading applications. Later systems usually base lip location on face detection.

Several methods locate the lip region after face detection. One roughly locates the mouth region (the region of interest) from the typical position of the mouth within the face region. This method usually takes half the face width as the width of the mouth region and one third of the face height as its height. It is simple and fast, but it loses effectiveness for images with different head poses and lip shapes. Another approach is gray projection, in which the image is projected onto the horizontal and vertical axes and the mouth region is defined by the valleys of the resulting horizontal and vertical curves. This makes the mouth region easy to define, but its accuracy is easily affected by poor lighting, low contrast between lip and skin color, and beards around the mouth.

Lip tracking is precise lip segmentation based on the lip location. Historically, there have been two main approaches to lip tracking from image sequences. The first is the color-based approach, for which different color spaces have been proposed, for example RGB [1] and HSV. Red Exclusion is an effective approach for extracting the lips: the mouth is tracked using only the G and B color components, but it works only for white subjects. Another color-based approach [2] extracts the lip region by analyzing the color distributions of lip and skin; its drawback is that it works only for a specified skin color (yellow or white skin) and does not take beards or teeth into account.
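The lip-location and color-based techniques surveyed above can be illustrated with small pure-Python sketches. `rough_mouth_roi` applies the half-width, one-third-height rule; `gray_projection_mouth` projects a grayscale region onto both axes and returns the longest valley in each curve; `red_exclusion_mask` thresholds a feature built only from the G and B channels. The function names, the `ratio` and `threshold` defaults, the placement of the mouth box in the lower third of the face, and the use of log(G/B) as the red-exclusion feature are all assumptions of this sketch; [1] should be consulted for the published red-exclusion formulation.

```python
import math


def rough_mouth_roi(face):
    """Rough mouth box (x, y, w, h) from a face box (x, y, w, h).

    Width = half the face width and height = one third of the face height,
    as in the text. Centring the box horizontally in the lower third of the
    face is an assumption of this sketch.
    """
    x, y, w, h = face
    roi_w, roi_h = w // 2, h // 3
    return x + (w - roi_w) // 2, y + h - roi_h, roi_w, roi_h


def valley_span(curve, ratio=0.9):
    """(start, end) of the longest run where curve < ratio * mean(curve).

    ratio is a hypothetical tuning value; returns None if there is no valley.
    """
    limit = ratio * sum(curve) / len(curve)
    best, start = None, None
    # A trailing +inf sentinel closes a valley that reaches the last index.
    for i, value in enumerate(list(curve) + [math.inf]):
        if value < limit and start is None:
            start = i
        elif value >= limit and start is not None:
            if best is None or i - start > best[1] - best[0] + 1:
                best = (start, i - 1)
            start = None
    return best


def gray_projection_mouth(gray):
    """Locate a dark mouth band in a grayscale ROI (list of rows of 0-255 ints).

    Returns ((first_row, last_row), (first_col, last_col)) of the valleys.
    """
    rows = [sum(row) for row in gray]        # horizontal projection curve
    cols = [sum(col) for col in zip(*gray)]  # vertical projection curve
    return valley_span(rows), valley_span(cols)


def red_exclusion_mask(pixels, threshold=0.0):
    """Lip candidates: pixels whose log(G/B) is below threshold; red is ignored.

    The log(G/B) feature and the default threshold are assumptions of this
    sketch, not values taken from [1].
    """
    return [
        # +1 avoids log(0) and division by zero on black pixels
        [math.log((g + 1) / (b + 1)) < threshold for _, g, b in row]
        for row in pixels
    ]
```

The projection sketch also shows the weakness noted in the text: anything dark in the region, such as a beard or a shadow, produces a valley just as the mouth does.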

PROPOSED SYSTEM:

Lip reading is a technique for understanding or interpreting speech without hearing it, one especially mastered by people with hearing difficulties. The ability to lip-read enables a person with a hearing impairment to communicate with others and to engage in social activities that would otherwise be difficult. Recent advances in the fields of computers.

Advantages

- The proposed system is mainly helpful for people with disabilities, such as deaf people, who can easily express their feelings through lip movements.

- Easy to install, and the installation cost is very low.

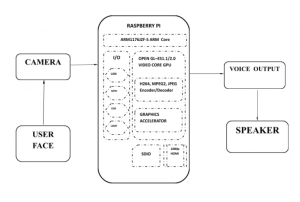

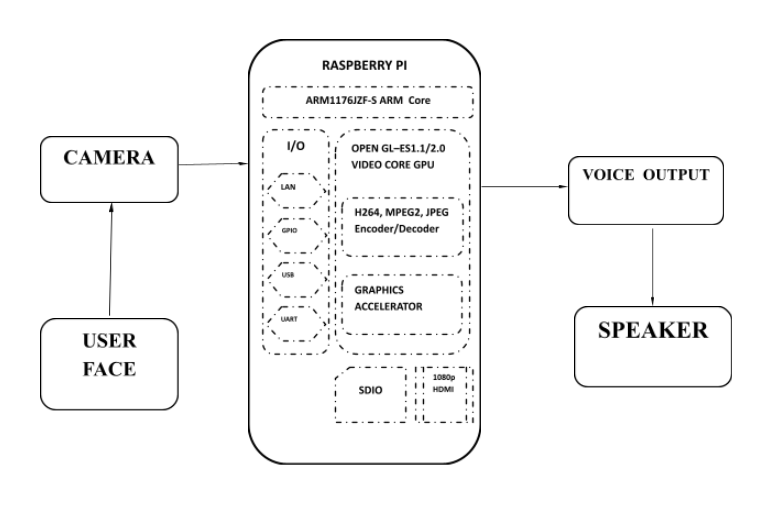

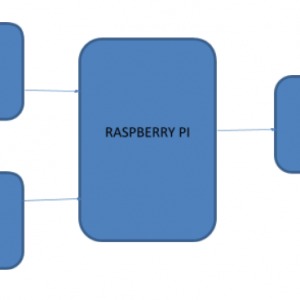

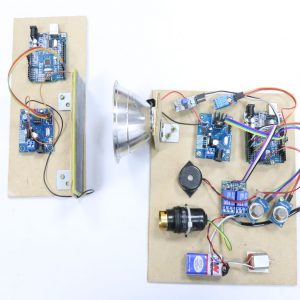

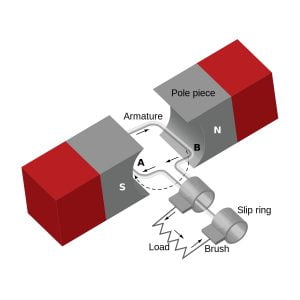

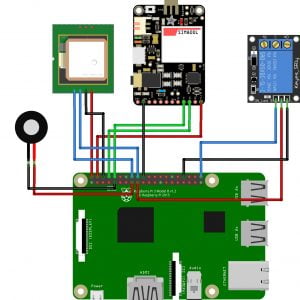

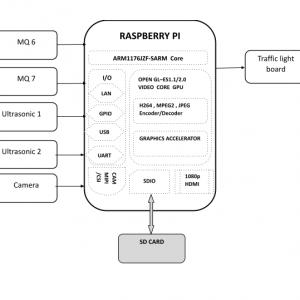

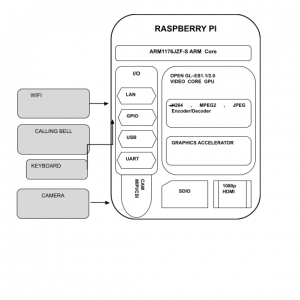

BLOCK DIAGRAM:

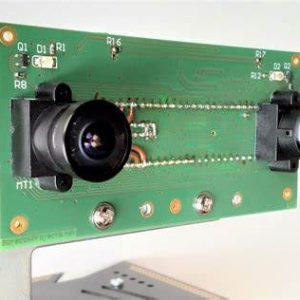

CAM

MIPI/CSI

BLOCK DIAGRAM EXPLANATION:

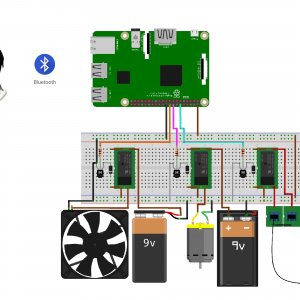

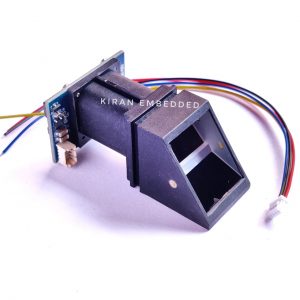

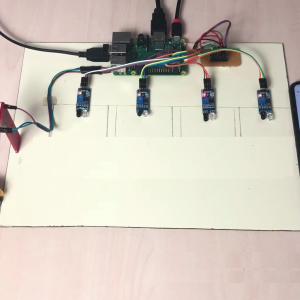

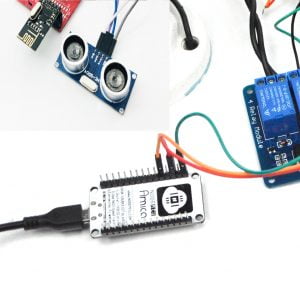

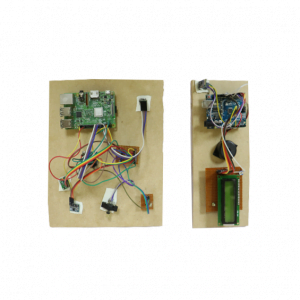

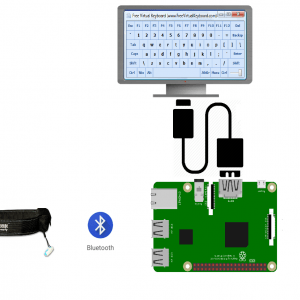

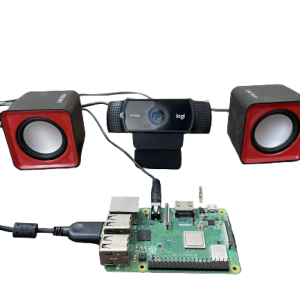

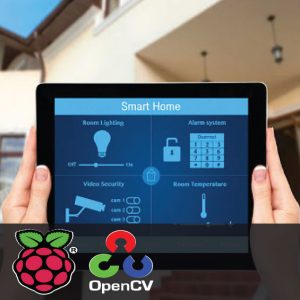

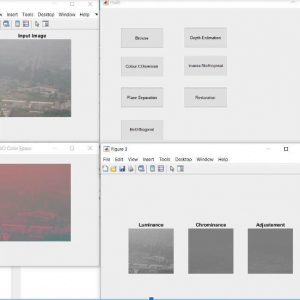

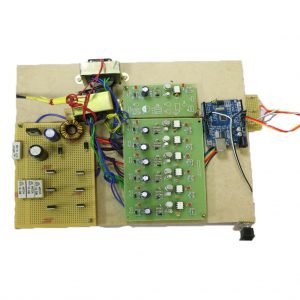

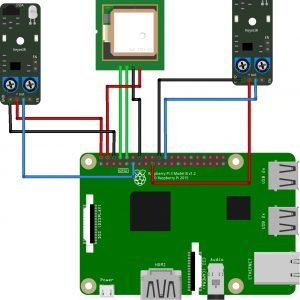

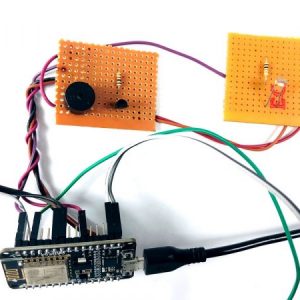

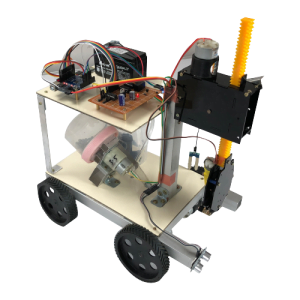

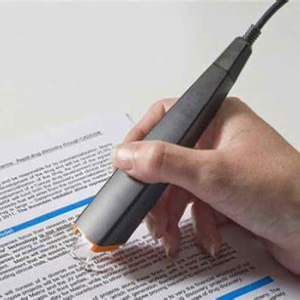

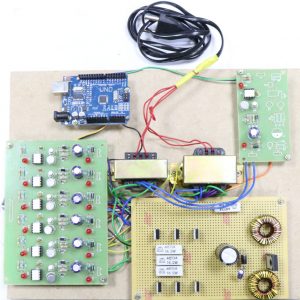

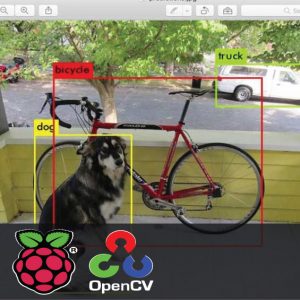

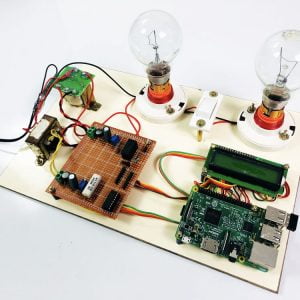

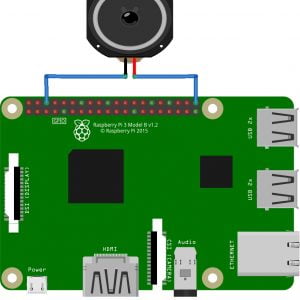

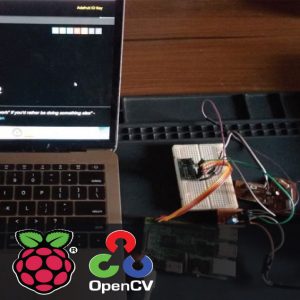

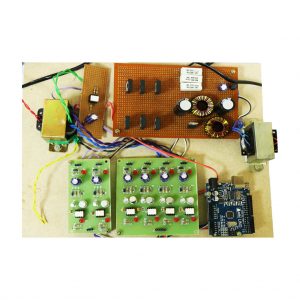

In this system, the Raspberry Pi acts as the heart of the system, and the camera is interfaced with it. The camera captures the users' lip movements, and based on these movements, voice output is produced through the speakers. OpenCV is used to capture the lip movements from the camera.

- Camera: captures the lip movements of the users.

PROJECT DESCRIPTION:

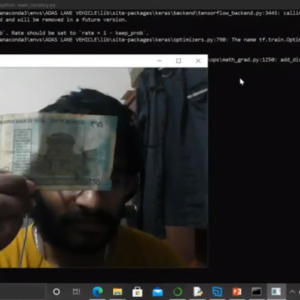

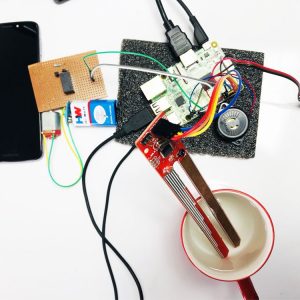

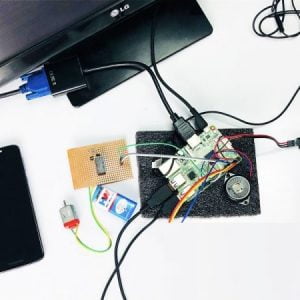

The camera is connected to the Raspberry Pi. Once the program is executed, it waits for an interrupt, after which the camera captures the users' lip movements; OpenCV is used to capture these movements. All lip motions captured by the camera are fed to the Raspberry Pi, which analyzes them and produces voice output through the speakers. The proposed system is mainly intended for people with disabilities and elderly people.
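The capture, analyze, and respond flow described above can be sketched as a small processing loop. The text does not specify how lip movement is measured or mapped to voice output, so everything here is hypothetical: each frame is reduced to a mouth-opening value by a caller-supplied `measure_opening` function (for example, mouth height divided by mouth width from the lip segmentation), a movement is reported whenever that value changes by more than `min_change` between consecutive frames, and `on_movement` stands in for whatever triggers the speaker output. Waiting for the interrupt is left out.

```python
def detect_movement(openings, min_change=0.1):
    """Indices of frames whose mouth opening differs from the previous frame
    by more than min_change.

    openings: per-frame mouth-opening values (a hypothetical measure);
    min_change: a hypothetical threshold on the absolute change.
    """
    events = []
    previous = None
    for index, value in enumerate(openings):
        if previous is not None and abs(value - previous) > min_change:
            events.append(index)
        previous = value
    return events


def run(frames, measure_opening, on_movement, min_change=0.1):
    """Hypothetical main loop: measure every frame and report movements.

    On the Raspberry Pi, frames would come from the camera (e.g. via OpenCV),
    measure_opening from the lip segmentation step, and on_movement would
    trigger the voice output on the speakers.
    """
    openings = (measure_opening(frame) for frame in frames)
    for index in detect_movement(openings, min_change):
        on_movement(index)
```

Keeping the camera, the measurement, and the audio output behind plain callables lets the detection logic be tested on recorded values before it runs on the Raspberry Pi.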

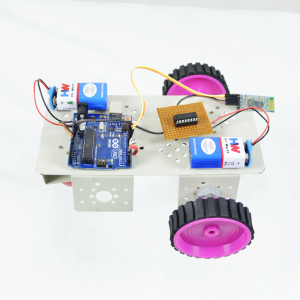

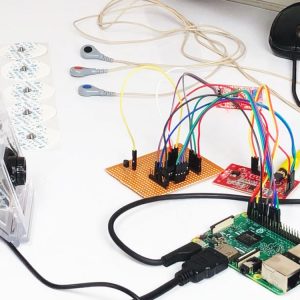

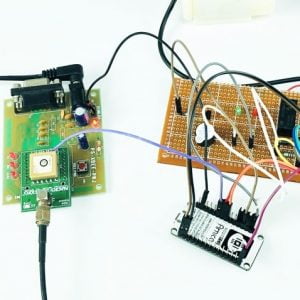

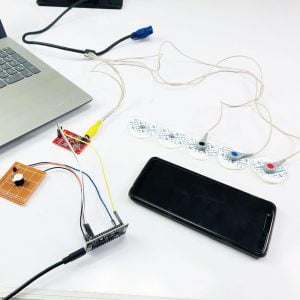

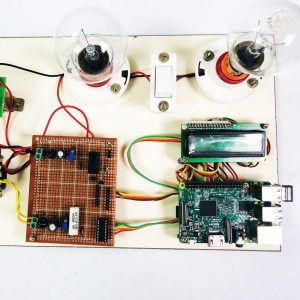

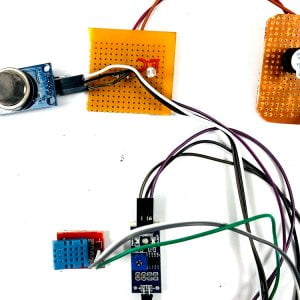

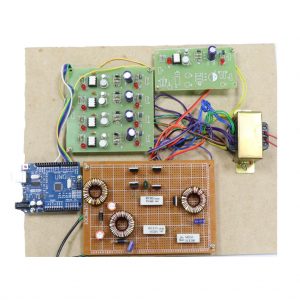

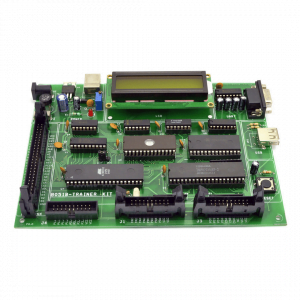

HARDWARE REQUIREMENTS:

- Raspberry Pi

- Camera

- Speakers

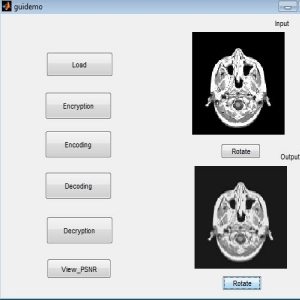

SOFTWARE REQUIREMENTS:

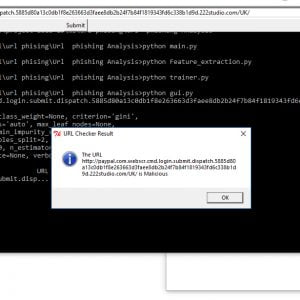

- Python

- Raspbian Jessie

- OpenCV

CONCLUSION:

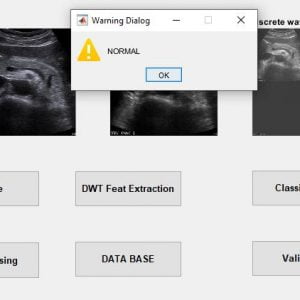

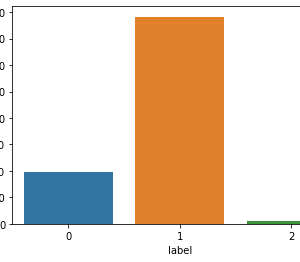

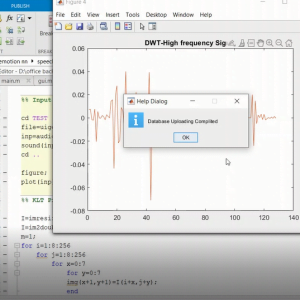

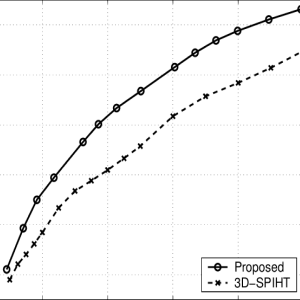

In this paper, we describe a new robust approach to improve lip localization and tracking. The first part of the proposed algorithm is lip location based on OpenCV. The experimental results show that the proposed method can successfully detect the lip region, and the accuracy of this lip location allows more accurate lip region segmentation. In the second part, the captured lip movements are fed to the Raspberry Pi, which is used to accurately extract the lip shape and track the lip region. Overall, the implemented approach has shown high reliability and performs robustly under various conditions.

REFERENCES

[1] T. W. Lewis, D. M. Powers, "Lip Feature Extraction Using Red Exclusion", Proc. Selected Papers from the Pan-Sydney Workshop on Visual Information Processing, pp. 61-67, 2000.

[2] Zhang Zhi-wen, Shen Hai-bin, "Lip detecting algorithm based on chroma distribution diversity", Journal of Zhejiang University, 42(8), pp. 1355-1359, 2008.

[3] Xie Lei, Cai Xiu-Li, Fu Zhong-Hua, "A robust hierarchical lip tracking approach for lipreading and audio visual speech recognition", Proc. Int. Conf. Machine Learning and Cybernetics, vol. 6, pp. 3620-3624, 2004.

[4] R.-L. Hsu, M. Abdel-Mottaleb, A. K. Jain, "Face Detection in Color Images", IEEE Trans. on Pattern Analysis and Machine Intelligence, 2002.

[5] A. Hurlbert, T. Poggio, "Synthesizing a Color Algorithm From Examples", Science, vol. 239, pp. 482-485, 1988.

[6] Liang Ya-ling, Du Ming-hui, "Lip Extraction Based on a component of Lab Color Space".
